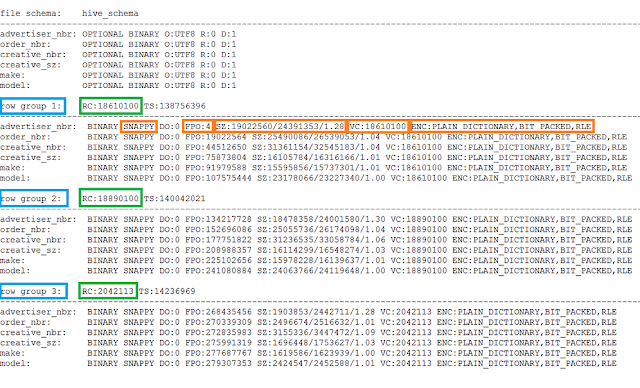

For example, Parquet stores both INTEGER and DATE types as the INT32 primitive type; an annotation identifies the original type as a DATE. PXF applies its own data type mapping when reading Parquet data.

Note: PXF supports filter predicate pushdown on all of the mapped Parquet data types except the fixed_len_byte_array and int96 types.
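To make the DATE example concrete, here is a minimal Python sketch. It assumes the Parquet format's definition of the DATE annotation: an INT32 counting days since the Unix epoch (1970-01-01). The helper `decode_int32` is hypothetical and is not part of PXF or any Parquet library.

```python
from datetime import date, timedelta
from typing import Optional, Union

# Assumption: Parquet's DATE annotation marks an INT32 as a count of days since
# the Unix epoch (1970-01-01); without it, the INT32 is read as a plain integer.
EPOCH = date(1970, 1, 1)


def decode_int32(raw: int, annotation: Optional[str] = None) -> Union[int, date]:
    """Interpret a raw INT32 value according to an optional annotation.

    Hypothetical helper for illustration only.
    """
    if annotation == "DATE":
        return EPOCH + timedelta(days=raw)
    return raw


print(decode_int32(19000))          # 19000
print(decode_int32(19000, "DATE"))  # 2022-01-08
```

The same stored bits yield two different values; only the annotation decides which one a reader should produce.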

# Parquet file extension how to

Use the PXF HDFS connector to read and write Parquet-format data. This section describes how to read and write HDFS files that are stored in Parquet format, including how to create, query, and insert into external tables that reference files in the HDFS data store. PXF currently supports reading and writing primitive Parquet data types only.

Prerequisites: Ensure that you have met the PXF Hadoop Prerequisites before you attempt to read data from or write data to HDFS.

Parquet supports a small set of primitive data types and uses metadata annotations to extend the data types that it supports. These annotations specify how to interpret the primitive type. To read and write Parquet primitive data types in Greenplum Database, map Parquet data values to Greenplum Database columns of the same type.
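As an illustration of the "same type" rule, the following Python sketch turns a Parquet schema, given as (name, primitive type, annotation) triples, into a Greenplum column list. The `SAME_TYPE` table and `column_list` helper are hypothetical: the pairs follow the primitive-type-plus-annotation idea described here, but they are assumptions, not PXF's published mapping. Pairs missing from the table, such as INT96, raise an error instead of guessing.

```python
from typing import List, Optional, Tuple

# Hypothetical, illustrative (primitive type, annotation) -> Greenplum type pairs.
# These are assumptions based on the "same type" rule, not PXF's official table.
SAME_TYPE = {
    ("BOOLEAN", None): "boolean",
    ("INT32", None): "integer",
    ("INT32", "DATE"): "date",
    ("INT64", None): "bigint",
    ("FLOAT", None): "real",
    ("DOUBLE", None): "double precision",
    ("BYTE_ARRAY", None): "bytea",
    ("BYTE_ARRAY", "UTF8"): "text",
}


def column_list(schema: List[Tuple[str, str, Optional[str]]]) -> str:
    """Build a Greenplum column list from (name, primitive, annotation) triples."""
    cols = []
    for name, primitive, annotation in schema:
        try:
            gp_type = SAME_TYPE[(primitive, annotation)]
        except KeyError:
            # Refuse to guess for types this sketch does not cover.
            raise ValueError(f"no illustrative mapping for {primitive}/{annotation}") from None
        cols.append(f"{name} {gp_type}")
    return ", ".join(cols)


schema = [("id", "INT64", None), ("name", "BYTE_ARRAY", "UTF8"), ("born", "INT32", "DATE")]
print(column_list(schema))  # id bigint, name text, born date
```

Keying on the pair rather than on the primitive type alone is what lets INT32 map to either `integer` or `date`.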


# Parquet file extension 64 bits

As of August 2015, Parquet supports the big-data-processing frameworks including Apache Hive, Apache Drill, Apache Impala, Apache Crunch, Apache Pig, Cascading, Presto, and Apache Spark. In Parquet, compression is performed column by column, which enables different encoding schemes to be used for text and integer data. This strategy also keeps the door open for newer and better encoding schemes to be implemented as they are invented.

Parquet has an automatic dictionary encoding, enabled dynamically for data with a small number of unique values (i.e., below 10⁵), that enables significant compression and boosts processing speed. Storage of integers is usually done with dedicated 32 or 64 bits per integer; for small integers, packing multiple integers into the same space makes storage more efficient.
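The dictionary-encoding and bit-packing ideas above can be sketched together in Python: distinct values go into a dictionary, and the small integer indices that replace them are packed into the fewest bits that can hold them. This is a simplified illustration, not Parquet's on-disk format; it omits run-length encoding, page headers, and the exact byte layout, and all function names are hypothetical.

```python
from typing import Dict, List, Tuple


def dictionary_encode(values: List[str]) -> Tuple[List[str], List[int]]:
    """Replace each value with an index into a list of distinct values."""
    dictionary: List[str] = []
    positions: Dict[str, int] = {}
    indices: List[int] = []
    for v in values:
        if v not in positions:
            positions[v] = len(dictionary)
            dictionary.append(v)
        indices.append(positions[v])
    return dictionary, indices


def bit_width(max_value: int) -> int:
    """Fewest bits (at least one) that can hold every value in 0..max_value."""
    return max(1, max_value.bit_length())


def bit_pack(ints: List[int], width: int) -> bytes:
    """Pack non-negative ints into consecutive width-bit slots, lowest bits first."""
    acc = 0
    for i, v in enumerate(ints):
        acc |= v << (i * width)
    nbytes = (len(ints) * width + 7) // 8  # round up to whole bytes
    return acc.to_bytes(nbytes, "little")


def bit_unpack(data: bytes, width: int, count: int) -> List[int]:
    """Inverse of bit_pack: read count width-bit slots back out."""
    acc = int.from_bytes(data, "little")
    mask = (1 << width) - 1
    return [(acc >> (i * width)) & mask for i in range(count)]


colors = ["red", "green", "red", "red", "blue", "green", "red", "red"]
dictionary, idx = dictionary_encode(colors)
width = bit_width(len(dictionary) - 1)
packed = bit_pack(idx, width)
print(dictionary)          # ['red', 'green', 'blue']
print(idx)                 # [0, 1, 0, 0, 2, 1, 0, 0]
print(width, len(packed))  # 2 2
```

With three distinct values, each index needs only 2 bits, so the eight indices fit in 2 bytes instead of the 32 bytes that dedicated 32-bit integers would take.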

# Parquet file extension software

The open-source project to build Apache Parquet began as a joint effort between Twitter and Cloudera. Parquet was designed as an improvement upon the Trevni columnar storage format created by Hadoop creator Doug Cutting. The first version, Apache Parquet 1.0, was released in July 2013. Since April 27, 2015, Apache Parquet has been a top-level Apache Software Foundation (ASF)-sponsored project.
